We proposed an architecture design named Super-Label, which improves the segmentation result, and a data splitting method named Mini-Data, which encourages data diversity. We demonstrated the efficacy of our approaches with a mean Dice score of 0.78 using only 20% of the total data. Our network generalizes well to unseen test data without overfitting, compared to the previous year’s winner.

Challenge

Example images from the training and validation sets, with objects of interest enclosed in blue rectangles. (a)(b) Training and validation images of the suction tool; (c)(d) training and validation images of the ultrasound probe.

Data Augmentation

Augmentation:

Horizontal flip

Affine transformation with 0.1 scale limit, 0.1 shift limit and 30 degrees rotation limit

Cutout

Image Augmentation

Random brightness, Random Gamma, Random Contrast, Random HSV

Random spotlight

Motion blur
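The two simplest items in the list above, horizontal flip and cutout, can be sketched in plain NumPy as follows. This is only an illustration under stated assumptions: the function names, the 32-pixel cutout size, the 0.5 flip probability, and the choice to apply cutout to the image but not to the mask are ours and are not taken from the poster.

```python
import numpy as np


def horizontal_flip(image, mask):
    """Flip image (H, W, C) and mask (H, W) left-right together."""
    return image[:, ::-1].copy(), mask[:, ::-1].copy()


def cutout(image, rng, size=32):
    """Zero out one random size x size square of the image.

    The square size is a hypothetical value, not from the poster.
    """
    h, w = image.shape[:2]
    top = int(rng.integers(0, h - size + 1))
    left = int(rng.integers(0, w - size + 1))
    out = image.copy()
    out[top:top + size, left:left + size] = 0
    return out


def augment(image, mask, rng, flip_prob=0.5, cutout_size=32):
    """Apply a random flip to image and mask, then cutout to the image only."""
    if rng.random() < flip_prob:
        image, mask = horizontal_flip(image, mask)
    # Assumption: the mask is left untouched by cutout.
    return cutout(image, rng, cutout_size), mask
```

The geometric transform is applied to the image and the mask jointly so that pixel labels stay aligned with the pixels they describe.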

MICCAI EndoVis2018:

13 sequences of video sampled at 5 Hz, each sequence containing 150 frames, totaling 1950 images

Surgical setting:

Partial nephrectomy in pig test

Classes: 12 categories of objects

Architecture

Super-Label

Following [4], we introduce prior semantic information that specifies which classes are similar

The prior information is used in the loss function to make learning more efficient

$$l = \lambda \cdot l_{\text{super\_label}} + l_{\text{sub\_label}}$$

Achieved by adding two different classification layers - super and sub - in the network, with the same encoding and decoding path (Figure 4)

At inference time, the super and sub scores are combined, and the class with the maximum total score is assigned

$$s_{\text{total},i,j} = s_{\text{super},i} + s_{\text{sub},j}$$

where s is the score and j indexes the j-th sub class under the i-th super class.

In our experiment, there are two super classes:

Tool, with sublabels [1,2,3,6,7,8,9,11] in the table above

Background, with sublabels [0,4,5,10] in the table above
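The combined loss and the score combination described in this section can be sketched in NumPy as below. This is a minimal sketch, not the authors' implementation. It assumes cross-entropy for both loss terms, log-softmax outputs as the scores (so that a sum of scores corresponds to a product of probabilities), pixels flattened into rows, and super class ids 0 (background) and 1 (tool). All function names and the default value of `lam` are hypothetical.

```python
import numpy as np

# Sub-label ids per super class, as listed above.
TOOL_SUBS = [1, 2, 3, 6, 7, 8, 9, 11]
BACKGROUND_SUBS = [0, 4, 5, 10]

# Lookup from sub-label id to super id (0 = background, 1 = tool; ids assumed).
SUB_TO_SUPER = np.zeros(12, dtype=np.int64)
SUB_TO_SUPER[TOOL_SUBS] = 1


def log_softmax(logits, axis=-1):
    """Numerically stable log-softmax."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))


def cross_entropy(logits, target):
    """Mean cross-entropy; logits is (N, C), target is (N,) integer labels."""
    logp = log_softmax(logits)
    return -logp[np.arange(target.shape[0]), target].mean()


def super_label_loss(super_logits, sub_logits, sub_target, lam=1.0):
    """l = lam * l_super_label + l_sub_label (lam=1.0 is a placeholder)."""
    super_target = SUB_TO_SUPER[sub_target]  # derive super labels from sub labels
    return (lam * cross_entropy(super_logits, super_target)
            + cross_entropy(sub_logits, sub_target))


def predict(super_logits, sub_logits):
    """s_total[i, j] = s_super[i] + s_sub[j]; return the best sub class per row."""
    s_super = log_softmax(super_logits)          # (N, 2)
    s_sub = log_softmax(sub_logits)              # (N, 12)
    s_total = s_super[:, SUB_TO_SUPER] + s_sub   # (N, 12)
    return s_total.argmax(axis=1)
```

With this formulation, a confident super-class prediction can override a slightly higher sub-class score that belongs to the other super class, which is one way the prior grouping can influence the final label.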

Mini-Data

Challenge: predicting objects never seen during training, given the limited training data available (2235 images in total)

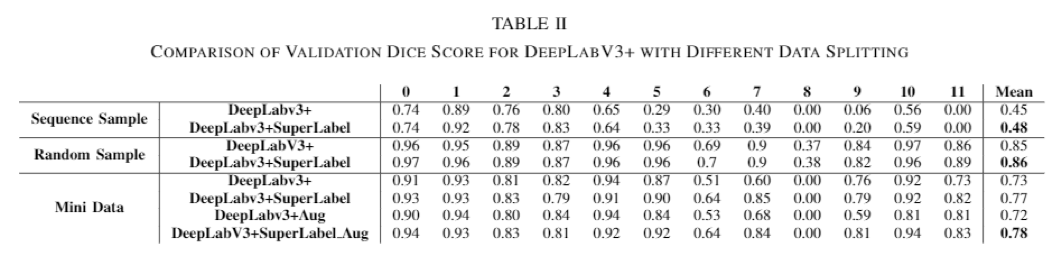

Randomly sampling frames from all video sequences and validating on the remaining frames produces a good result (dice=0.86, Table II row 4), which strongly suggests overfitting

Randomly sampling video sequences and validating on the other sequences produces a poor result (dice=0.53, Table I row 3), indicating that the sequences have distinct data distributions (Figure 6)

Thus, we propose to split the training and validation data by sampling only 20% of each video sequence for training and generalizing the network to the remaining 80%, so that more diversity is included with the same amount of annotated data.
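A per-sequence 20%/80% split of this kind could look roughly like the sketch below. It assumes that "sampling" means taking evenly spaced frame indices within each sequence (random sampling within a sequence would be an equally plausible reading). The function name `mini_data_split` and the dictionary input format are hypothetical.

```python
import numpy as np


def mini_data_split(frames_per_sequence, train_fraction=0.2):
    """Split every sequence into training and validation frame indices.

    frames_per_sequence: dict mapping sequence id -> number of frames.
    Returns a dict mapping sequence id -> (train_idx, val_idx).
    Assumption: training frames are evenly spaced within each sequence.
    """
    split = {}
    for seq_id, n_frames in frames_per_sequence.items():
        n_train = max(1, int(round(train_fraction * n_frames)))
        # Evenly spaced indices from the first to the last frame.
        train_idx = np.unique(
            np.linspace(0, n_frames - 1, n_train).round().astype(int)
        )
        # Every remaining frame of the same sequence is used for validation.
        val_idx = np.setdiff1d(np.arange(n_frames), train_idx)
        split[seq_id] = (train_idx, val_idx)
    return split
```

Because every sequence contributes training frames, the training set covers all recorded scenes while still using only a fifth of the annotations.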

Experiment

• Hyperparameters: as shown in Figure 5

• Data Processing: images resized from 1280x1024 to 320x256

• Sequence Sample: sequences 4, 5, and 11 are left out for validation; this serves as the lower limit

• Random Sample: 20% of frames are randomly left out for validation; this serves as the upper limit

• Mini Data: 20% of frames are uniformly sampled from each video sequence

Result

Comparison with previous year’s team
